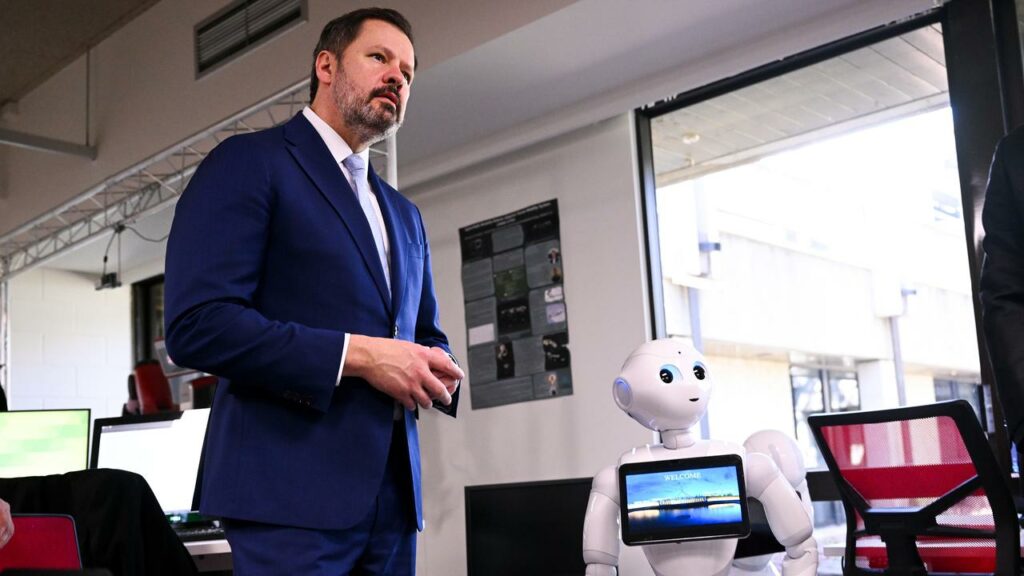

‘Trust and transparency’ critical for growing AI sector

Andrew Brown

Regulations on the use of artificial intelligence could put mandatory safeguards in place for its use in high-risk industries.

The federal government is considering laws governing the use of AI to ensure stronger protections as the technology rapidly develops.

It follows the release of the government’s interim response to industry consultation on the responsible use of AI.

Among the measures being considered are safeguards for the use of AI in potentially high-risk settings, such as critical infrastructure (including water and electricity), health and law enforcement.

Industry Minister Ed Husic said the government was considering whether new laws were needed or whether existing measures could be adapted to “get the balance right”.

He said this included testing AI models before they were released or while they were operating, and making the sector more transparent.

“Holding people and organisations in particular to account where things do not operate in the way that they were intended,” he told ABC TV.

Talks are already under way with industry on developing a voluntary safety standard for AI, ahead of any mandatory safeguards.

Possible safeguards could cover how products are tested before and after release, along with greater transparency about how they are designed and what data they use.

The voluntary labelling and watermarking of AI-generated material is also being considered.

Mr Husic said it was critical to put protections in place.

“The Albanese government moved quickly to consult with the public and industry on how to do this, so we start building the trust and transparency in AI that Australians expect,” he said.

“We want safe and responsible thinking baked in early as AI is designed, developed and deployed.”

The interim response paper said that while many uses of AI did not present risks requiring oversight, there were still significant areas of concern.

“Existing laws do not adequately prevent AI-facilitated harms before they occur, and more work is needed to ensure there is an adequate response to harms after they occur,” the report said.

“The current regulatory framework does not sufficiently address known risks presented by AI systems, which enable actions and decisions to be taken at a speed and scale that hasn’t previously been possible.”

More than 500 groups responded to the government’s discussion paper on AI, including tech giants and industry experts.

The Australian Information Industry Association welcomed the discussion paper, saying the government needed to work with international frameworks to ensure Australia did not get left behind.

A report from the association said 34 per cent of Australians were willing to trust AI technology, with 71 per cent believing guardrails needed to be put in place by government.

Chief executive Simon Bush said the government needed to take advantage of the growth of AI.

“The regulation of AI will be seen as a success by industry if it builds not only societal trust in the adoption and use of AI by its citizens and businesses, but also that it fosters investment and growth in the Australian AI sector,” he said.

AAP